Attribute MSA helps you analyze your measurement system and strengthen it with appropriate decisions based on the results.

Measurement system analysis (MSA) is one of the core tools in the manufacturing industry. Variation in your measurement system affects product quality and can damage your company’s brand.

This detailed guide to attribute measurement system analysis will help you strengthen your measurement system.

You can learn the basics of measurement system analysis (MSA) in our core tool articles.

This guide focuses on how MSA should be performed for attribute data.

After reading this complete guide, you will know:

Why attribute MSA is needed, what types of attribute MSA exist, when to use each type, and how to perform an attribute MSA study.

Let’s get started.

What measurement systems do we have?

Variable MSA

- Length and diameter measurement with a caliper

- Cycle time measurement

- Torque measurement

- Flatness measurement

- Speed measurement

Attribute MSA

- Customer satisfaction

- Grades

- Defects (Ok, NOK)

- Gauge checks (Go / No-Go)

- Visual defect checks

In this guide, we focus on attribute MSA.

Purpose of Attribute Measurement System Analysis

Attribute MSA is performed for the following purposes:

Accuracy check:

To assess conformance to the customer standard and fulfill the customer requirement.

MSA identifies how well our measurement system agrees with our masters (reference samples).

Precision check:

To determine whether inspectors apply the same criteria across all shifts, machines, etc. when measuring and evaluating parts, so that different inspectors reach the same decision. This is called reproducibility.

To quantify whether each inspector or gauge can consistently repeat the same inspection decision on the same part. This is called repeatability.

To determine:

Whether the inspectors need any training, and whether the gauges need any correction or adjustment.

Where the standards are not clearly defined.

Next, let’s look at the categories of attribute data.

3 categories of Attribute Data in MSA

Building on the attribute data types above, attribute data is divided into three categories:

- Attribute data with 2 categories: OK/NOK, Go/No-Go

- Attribute data with 3 or more categories that have no natural order (nominal), e.g. Yellow-Red-Blue

- Attribute data with 3 or more categories that are in order (ordinal), e.g. 1-2-3-4, Low-Medium-High

The attribute measurement system analysis study is set up according to these categories.

An attribute MSA study follows these steps:

- Plan the study

- Conduct the study

- Analyze and interpret the results

- Improve the measurement system if necessary

- Ongoing evaluation

Step 1 – Plan Study

Define Sample Size

First, in the planning stage, we need to define the sample size.

The analysis of the study depends on the sample size selected.

The sample size should be 30 to 50 samples, or as required by the customer.

The next step in planning is to select the samples from the lot or production.

- Selected samples should cover the full range of variation in the process.

- Samples should contain a mix of good and bad parts of roughly 50/50, or at least 30/70, for better results.

- Each part should have only one defect to avoid confusion.
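The sample selection rules above can be checked mechanically before the study starts. Below is a minimal Python sketch, assuming the planned samples are kept as a dictionary of sample number to reference result ("OK" or "NOK"); the helper name is hypothetical, and reading the good/bad rule as "neither OK nor NOK parts below 30%" is our assumption.

```python
# Hypothetical helper: checks a planned sample set against the guidance above.
def check_sample_plan(standards, min_size=30, max_size=50):
    """Return a list of warnings (empty if the plan looks reasonable).

    standards: dict mapping sample number -> reference result, "OK" or "NOK".
    Assumption: the good/bad guidance is read as "neither OK nor NOK parts
    make up less than 30% of the samples".
    """
    n = len(standards)
    if n == 0:
        return ["no samples defined"]
    warnings = []
    if not min_size <= n <= max_size:
        warnings.append(f"sample size {n} is outside {min_size}-{max_size}")
    unknown = sorted(k for k, v in standards.items() if v not in ("OK", "NOK"))
    if unknown:
        warnings.append(f"samples without a valid reference result: {unknown}")
    n_ok = sum(1 for v in standards.values() if v == "OK")
    # Integer comparison avoids floating-point edge cases at exactly 30% / 70%.
    if not 3 * n <= 10 * n_ok <= 7 * n:
        warnings.append(f"{n_ok} of {n} samples are OK; aim for 30% to 70%")
    return warnings


plan = {i: ("OK" if i <= 15 else "NOK") for i in range(1, 31)}
print(check_sample_plan(plan))  # → []
```

The size limits are parameters so that a customer-specified sample size can replace the 30 to 50 default.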

Contributing Factors for the Attribute MSA Study

We have to consider the main factors contributing to measurement variability, such as:

- Operators should be trained for the inspection.

- Each defect should be clearly defined and understood.

- The environment should be considered in the analysis, e.g. a sufficient lux level (lighting) at the measurement area.

Inspection work instruction

At this stage, all study procedures should be clearly written and documented, which makes both the inspection and the study more effective.

The study should also be blind: operators must not know which part they are measuring.

Step 2 – Conduct Study

In this step, we need to plan the time and place for conducting the study.

We also need to define how parts will be presented in a blind study and how much time is allowed to inspect each part.
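One common way to keep the study blind is to present the parts in a different random order for every appraiser and every trial. Here is a minimal Python sketch of that idea; the helper name and the appraiser labels are hypothetical, and the study coordinator (not the operator) would keep the resulting order list.

```python
import random


# Hypothetical helper: randomized presentation order for a blind study.
def blind_inspection_orders(sample_ids, appraisers, trials, seed=None):
    """Return {(appraiser, trial): [sample ids in the order to present them]}.

    Each appraiser/trial pair gets its own shuffle, so an operator cannot
    rely on remembering where a part appeared in an earlier trial.
    The optional seed only makes the plan reproducible for the coordinator.
    """
    rng = random.Random(seed)
    orders = {}
    for appraiser in appraisers:
        for trial in range(1, trials + 1):
            order = list(sample_ids)
            rng.shuffle(order)
            orders[(appraiser, trial)] = order
    return orders


orders = blind_inspection_orders(range(1, 31), ["Operator A", "Operator B"], trials=2, seed=7)
print(orders[("Operator A", 1)][:5])  # first five bearings to hand to Operator A
```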

While conducting the study, we need to watch for the following:

- Any deviation in the process

- Any environmental changes while the study is being conducted

- Errors in recording the inspection results

- Errors in the measurement method

- Differences between operators (and do not disturb the operators)

Now it’s time to measure and record the results on paper. The results should not be visible to the operators.

Let’s look at a practical example.

Practical Example: Attribute study (Minitab)

Your company produces bearings, which must be checked with a Go/No-Go gauge. Your mission is to identify the defective items.

Choose the samples as described in the planning stage.

You must know the reference result of every sample in advance, i.e. which samples are OK and which are NOK.

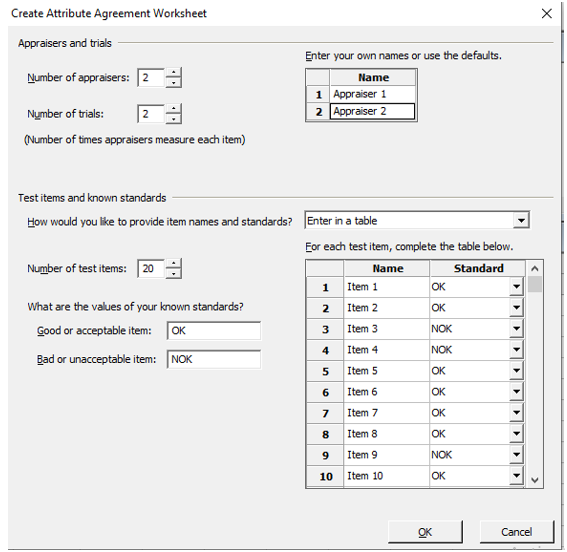

Each bearing should be numbered so the sample can be identified. A total of 30 samples are tested here.

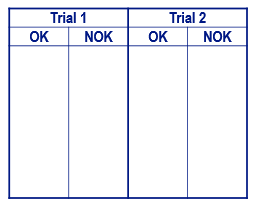

Two appraisers (2 operators) will check each bearing.

Each trial will be completed in 60 seconds (3 seconds per part).

Each inspector will perform 2 trials:

1st Trial

2nd Trial

The operator writes the results on paper (as OK or NOK).

Be careful with the order: the OK and NOK labels have to be exactly the same as those used for the standard in the Minitab® worksheet.

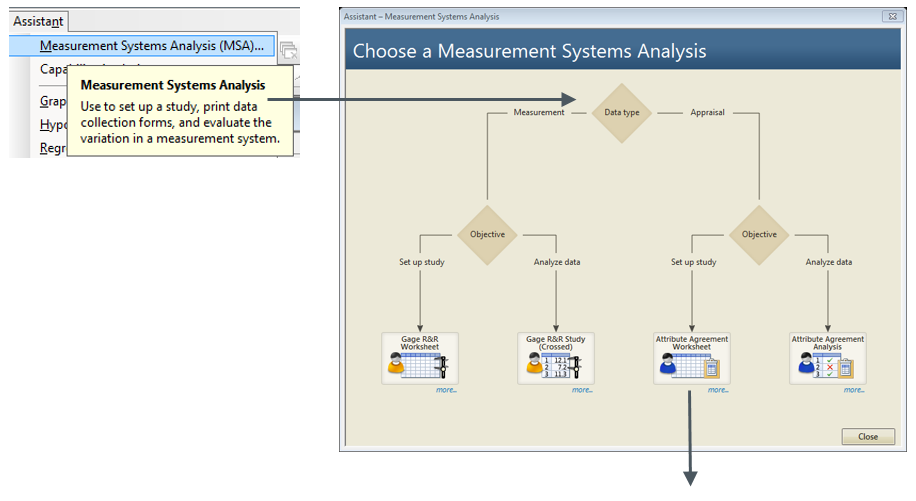

Set up the data in Minitab®

When the response is binary (OK/NOK):

Use Minitab: Assistant > MSA > Attribute Agreement.

When the response is ordinal or nominal (good, very good, bad, very bad, etc.):

Use Minitab: Stat > Quality Tools > Attribute Agreement Analysis.

After clicking OK, you get a new worksheet in which to enter the results.

Below is the results sheet from the observation results by operators.

Step-3 Analyze and Interpret Results

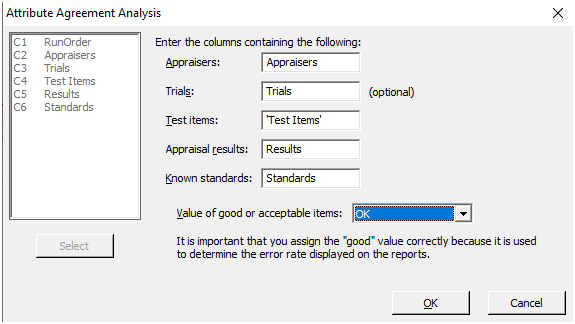

To analyze the data, follow the menu path below.

Assistant > Measurement System Analysis > Attribute Agreement Analysis

In the dialog box that opens, select the worksheet columns that contain the data.

After clicking OK, you will get the analysis windows below, which you use to interpret the results.

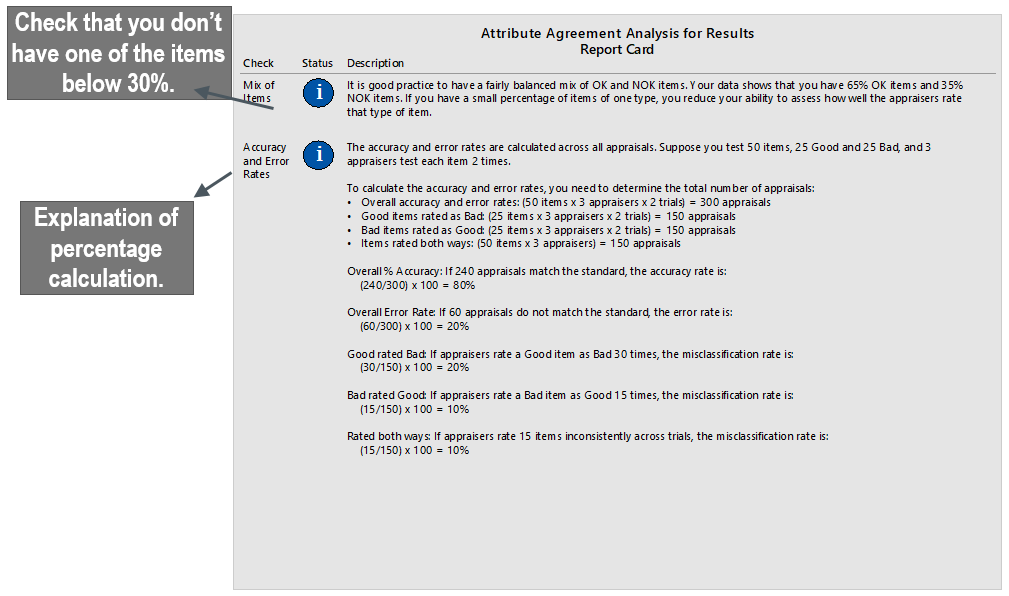

1 Report Card:

The following indicators are calculated:

- % Rated both ways per appraiser

- % Pass rated Fail per appraiser

- % Fail rated Pass per appraiser

- % Accuracy per appraiser

- Overall Accuracy Rate

Refer to the screenshots of our attribute study analysis below.

1. % Rated Both Ways Per Appraiser

What is it?

It shows the repeatability of each operator, i.e. the ability of each operator to ALWAYS assess a given part the same way.

How to calculate?

% Rated both ways per appraiser = (number of parts not assessed consistently / number of parts assessed) × 100.

Example: on 20 parts, the operator assessed 18 parts the same way in every trial and 2 parts sometimes OK, sometimes NOK => % Rated both ways = 2 / 20 = 10%.

What is expected?

% Rated both ways ≤ 10%.
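To make the formula concrete, here is a minimal Python sketch for one appraiser. It assumes the results are stored as a dictionary of part number to the list of that appraiser's ratings over all trials; this layout and the function name are our own illustration, not how Minitab stores or computes the data.

```python
# Hypothetical helper implementing the formula above for one appraiser.
def pct_rated_both_ways(results):
    """results: dict part number -> list of ratings over all trials,
    e.g. {1: ["OK", "OK"], 2: ["OK", "NOK"]}. Returns a percentage."""
    # A part counts as "rated both ways" if its trials contain more than one rating.
    inconsistent = sum(1 for ratings in results.values() if len(set(ratings)) > 1)
    return 100.0 * inconsistent / len(results)


# The example from the text: 20 parts, 2 of them rated differently across trials.
example = {part: ["OK", "OK"] for part in range(1, 19)}
example[19] = ["OK", "NOK"]
example[20] = ["NOK", "OK"]
print(pct_rated_both_ways(example))  # → 10.0
```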

2. % Pass Rated Fail Per Appraiser

What is it?

It studies the frequency for each operator of assessing a part as NOK when it is OK in reality.

It represents the producer’s risk which is a risk to scrap or rework OK parts.

How to calculate?

% Pass rated Fail = number of time parts assessed NOK when it is OK / number of assessment with standard OK in %.

Example: on 8 parts with standard 0K, the operator assessed 1 part 1 time NOK for an OK standard => % Pass rated Fail = 1 / 8 * 2 = 6.25%.

What will expected?

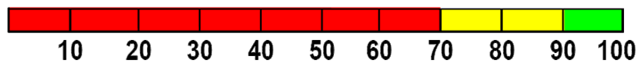

% closest to 0 (green <5%, orange <10%, red >10%)
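The same calculation as a minimal Python sketch, assuming the same hypothetical layout: one dictionary of part number to the appraiser's ratings, plus one dictionary of part number to the reference (standard) rating. Minitab performs this calculation itself; the sketch only follows the formula given above.

```python
# Hypothetical helper implementing the % Pass rated Fail formula above.
def pct_pass_rated_fail(results, standard):
    """Producer's risk for one appraiser, in %.

    results:  dict part -> list of this appraiser's ratings over all trials
    standard: dict part -> reference rating, "OK" or "NOK"
    """
    ok_parts = [part for part, ref in standard.items() if ref == "OK"]
    assessments = sum(len(results[part]) for part in ok_parts)
    rated_nok = sum(results[part].count("NOK") for part in ok_parts)
    return 100.0 * rated_nok / assessments


# The example from the text: 8 OK parts, 2 trials, one OK part rated NOK once.
standard = {part: ("OK" if part <= 8 else "NOK") for part in range(1, 21)}
results = {part: [ref, ref] for part, ref in standard.items()}
results[3] = ["OK", "NOK"]
print(pct_pass_rated_fail(results, standard))  # → 6.25
```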

3. % Fail Rated Pass Per Appraiser

What is it?

It measures how often each operator assesses a part as OK when it is actually NOK.

It represents the customer’s risk: the risk of sending NOK parts to the customer.

How to calculate?

% Fail rated Pass = (number of times parts with standard NOK were assessed OK / number of assessments of parts with standard NOK) × 100.

Example: on 12 parts with standard NOK, each assessed in 2 trials, the operator assessed 1 part OK two times => % Fail rated Pass = 2 / (12 × 2) = 8.33%.

What is expected?

As close to 0% as possible (green < 2%, orange < 5%, red > 5%).

For S&R characteristics, the only acceptable target is 0%.
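The customer’s risk mirrors the producer’s risk, counting NOK parts rated OK instead. A minimal Python sketch under the same assumed data layout (the function name is hypothetical):

```python
# Hypothetical helper implementing the % Fail rated Pass formula above.
def pct_fail_rated_pass(results, standard):
    """Customer's risk for one appraiser, in %.

    results:  dict part -> list of this appraiser's ratings over all trials
    standard: dict part -> reference rating, "OK" or "NOK"
    """
    nok_parts = [part for part, ref in standard.items() if ref == "NOK"]
    assessments = sum(len(results[part]) for part in nok_parts)
    rated_ok = sum(results[part].count("OK") for part in nok_parts)
    return 100.0 * rated_ok / assessments


# The example from the text: 12 NOK parts, 2 trials, one NOK part rated OK twice.
standard = {part: ("OK" if part <= 8 else "NOK") for part in range(1, 21)}
results = {part: [ref, ref] for part, ref in standard.items()}
results[15] = ["OK", "OK"]
print(round(pct_fail_rated_pass(results, standard), 2))  # → 8.33
```

For an S&R characteristic, any non-zero result from this function would already fail the 0% target.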

4. Accuracy Per Appraiser

What is it?

It gives the accuracy of one appraiser: the percentage of their appraisals that match the standard.

How to calculate?

Accuracy per appraiser = (number of appraisals that match the standard / number of appraisals) × 100.

Example: on 40 appraisals (20 parts × 2 trials), the operator assessed 1 NOK part OK two times and 1 OK part NOK one time => Accuracy per appraiser = (40 - 3) / 40 = 92.5%.

What is expected?

Accuracy > 90%.
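As a minimal Python sketch, again assuming the hypothetical part-to-ratings layout used above, the accuracy per appraiser simply counts every appraisal that equals the reference rating:

```python
# Hypothetical helper implementing the accuracy-per-appraiser formula above.
def accuracy_per_appraiser(results, standard):
    """results: dict part -> list of ratings over all trials;
    standard: dict part -> reference rating. Returns a percentage."""
    total = sum(len(ratings) for ratings in results.values())
    matches = sum(ratings.count(standard[part]) for part, ratings in results.items())
    return 100.0 * matches / total


# The example from the text: 20 parts x 2 trials = 40 appraisals, 3 mismatches.
standard = {part: ("OK" if part <= 8 else "NOK") for part in range(1, 21)}
results = {part: [ref, ref] for part, ref in standard.items()}
results[10] = ["OK", "OK"]   # NOK part rated OK twice
results[2] = ["OK", "NOK"]   # OK part rated NOK once
print(accuracy_per_appraiser(results, standard))  # → 92.5
```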

5. Overall Accuracy Rate

What is it?

It measures the overall effectiveness of the test.

It gives the accuracy for all appraisers together: the percentage of all appraisals that match the standard.

How to calculate?

Overall accuracy = (number of appraisals, across all appraisers, that match the standard / total number of appraisals) × 100.

Example: on 80 appraisals (20 parts × 2 trials × 2 appraisers), one operator assessed 1 NOK part OK two times and 1 OK part NOK one time (3 mismatches), and the other operator assessed 1 NOK part OK two times, another NOK part OK one time and 1 OK part NOK one time (4 mismatches) => Overall accuracy = (80 - 7) / 80 = 91.25% ≈ 91.3%.

What is expected?

Decision rules:

> 90% :

Excellent inspection process.

70 to 90% :

There must be an action plan, depending on how critical the inspection is. The inspection method, training process, boundary samples, and environment should all be verified and improved.

< 70% :

The inspection process is unacceptable. Reconsider it.
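The overall accuracy and the decision rules can be combined in a short Python sketch. It assumes a hypothetical layout with one part-to-ratings dictionary per appraiser; treating exactly 90% and exactly 70% as part of the "70 to 90%" band is our reading of the rules above.

```python
# Hypothetical helpers: overall accuracy across appraisers plus the decision rules above.
def overall_accuracy(all_results, standard):
    """all_results: dict appraiser -> {part: [ratings over all trials]}."""
    total = matches = 0
    for results in all_results.values():
        for part, ratings in results.items():
            total += len(ratings)
            matches += ratings.count(standard[part])
    return 100.0 * matches / total


def decision(overall_pct):
    """Map an overall accuracy (%) to the decision rules in the text."""
    if overall_pct > 90:
        return "excellent inspection process"
    if overall_pct >= 70:
        return "action plan required"
    return "unacceptable"


# The example from the text: 80 appraisals with 3 + 4 = 7 mismatches.
standard = {part: ("OK" if part <= 8 else "NOK") for part in range(1, 21)}
a = {part: [ref, ref] for part, ref in standard.items()}
a[10] = ["OK", "OK"]                # NOK part rated OK twice
a[2] = ["OK", "NOK"]                # OK part rated NOK once
b = {part: [ref, ref] for part, ref in standard.items()}
b[11] = ["OK", "OK"]                # NOK part rated OK twice
b[12] = ["NOK", "OK"]               # another NOK part rated OK once
b[3] = ["NOK", "OK"]                # OK part rated NOK once
pct = overall_accuracy({"A": a, "B": b}, standard)
print(pct, decision(pct))  # → 91.25 excellent inspection process
```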

Analysis:

Review the repeatability portion first (% Rated both ways per appraiser). If an appraiser cannot agree with themselves, ignore the comparisons to the standard and to other appraisers, and find out why.

For appraisers with acceptable repeatability, review the agreement with the standard (% Pass rated Fail per appraiser and % Fail rated Pass per appraiser). This tells us whether the inspectors are well calibrated.

For appraisers that have acceptable calibration, review their accuracy.

Finally, check overall accuracy.
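The review order above behaves like a sequence of gates, where a later check only makes sense once the earlier one passes. Below is a minimal Python sketch for one appraiser using the targets from the previous sections; the function name is hypothetical, and interpreting "acceptable calibration" as "neither misclassification rate in the red zone" is an assumption.

```python
# Hypothetical helper: applies the review order above to one appraiser (all values in %).
def review_appraiser(rated_both_ways, pass_rated_fail, fail_rated_pass, accuracy):
    # 1. Repeatability first: if the appraiser disagrees with themselves, stop here.
    if rated_both_ways > 10:
        return "repeatability problem: find out why before comparing to the standard"
    # 2. Calibration. Assumption: "acceptable" means neither rate is in the red zone.
    if pass_rated_fail > 10 or fail_rated_pass > 5:
        return "calibration problem: ratings disagree with the standard"
    # 3. Accuracy against the > 90% target.
    if accuracy <= 90:
        return "accuracy at or below 90%"
    return "acceptable"


print(review_appraiser(10.0, 6.25, 8.33, 92.5))
# → calibration problem: ratings disagree with the standard
```

The overall accuracy is then checked last, across all appraisers.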

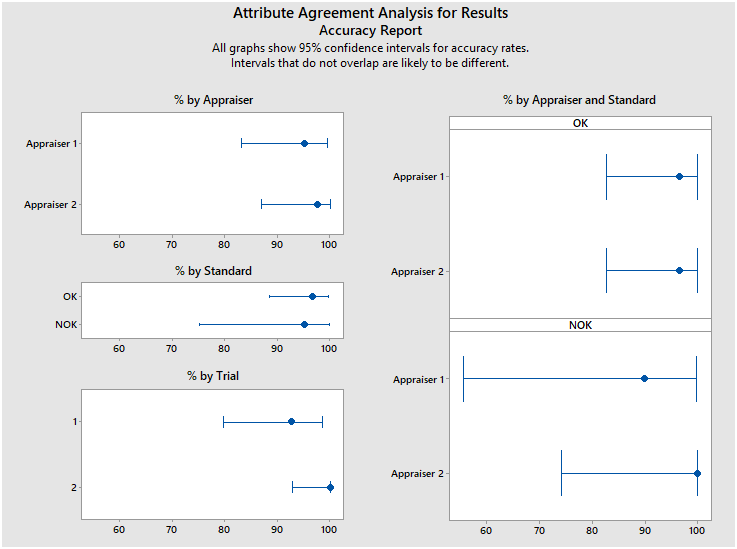

Interpret Other Graphs

Check whether any part received mixed assessments across the appraisers, or was assessed consistently by all operators but not in accordance with the standard.

Check any accuracy differences (between appraisers, between standards, between trials, …) to look for ways to improve.
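The per-part checks can be sketched in Python as well, assuming the same hypothetical layout of one part-to-ratings dictionary per appraiser. Here "mixed" is taken to mean that the ratings collected from all appraisers and trials for a part are not all identical, which is our interpretation.

```python
# Hypothetical helper: per-part checks across all appraisers and trials.
def flag_parts(all_results, standard):
    """all_results: dict appraiser -> {part: [ratings over all trials]}.

    Returns two lists of part numbers:
      mixed              - parts that did not get one single rating from everyone
      consistently_wrong - parts rated the same way by everyone, but not as the standard
    """
    mixed, consistently_wrong = [], []
    for part, ref in standard.items():
        ratings = set()
        for results in all_results.values():
            ratings.update(results[part])
        if len(ratings) > 1:
            mixed.append(part)
        elif ratings and ratings != {ref}:
            consistently_wrong.append(part)
    return mixed, consistently_wrong


standard = {1: "OK", 2: "NOK", 3: "OK"}
a = {1: ["OK", "OK"], 2: ["OK", "OK"], 3: ["OK", "NOK"]}
b = {1: ["OK", "OK"], 2: ["OK", "OK"], 3: ["OK", "OK"]}
print(flag_parts({"A": a, "B": b}, standard))  # → ([3], [2])
```

A part in the second list usually points to a boundary that the standard or the borderline catalog does not define clearly.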

Step 4 – Improve Measurement System

Once we have established that a measurement system has one or more problems, we need to figure out how to correct them.

- If the % Rated both ways for one appraiser is high, that appraiser may need training. Do they understand the characteristics they are looking for? Are the instructions clear to them? Do they have vision issues?

- If the accuracy per appraiser is low, the appraiser may define the categories differently from the standard. A standardized definition (borderline catalog) can improve this situation.

- If disagreement always occurs on the same part, clarify the boundary.

- If improvements are made, the study should be repeated to confirm that they have worked.

How could we improve the measurement system for our table?

Step 5 – Ongoing Evaluation and Future Actions

– All inspectors making this assessment in production need to be validated with an attribute Gage R&R (a correct assessment validates the inspector’s skill).

– Any new operator inspecting this part has to be validated with the Gage R&R.

– The frequency for revalidating inspectors has to be defined.

– If the borderline catalog changes (new defect, new boundary, …), the Gage R&R has to be updated (new parts to evaluate the defect, …) and inspectors have to be reassessed.

Conclusion:

You should now be able to:

Discuss the need for Attribute Measurement System Analysis.

Describe the types of Attribute Measurement System Analyses and when to use them.

Perform an Attribute Measurement System Analysis.